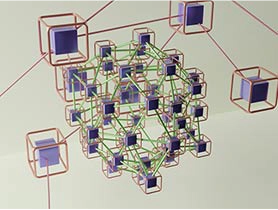

Cloud Data Processing streamlines and automates your end-to-end workflows, freeing organisations from time-consuming, labour-intensive processes. In an easy-to-use environment, you can build and maintain data pipelines from multiple sources, using built-in connectors to read, parse, enrich, and transform data according to your business needs.
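To make the read, parse, enrich, and transform stages concrete, here is a minimal Python sketch. It does not use the platform's own API: the function names, the in-memory CSV source, and the region lookup table are all hypothetical stand-ins chosen for illustration.

```python
import csv
import io

# Hypothetical in-memory source standing in for a built-in connector.
RAW_ORDERS = "order_id,country,amount\n1,de,10.50\n2,fr,4.00\n"

# Hypothetical reference data used by the enrich step.
REGION_BY_COUNTRY = {"de": "EMEA", "fr": "EMEA", "us": "AMER"}


def read(raw):
    """Read: expose the raw source as a text stream."""
    return io.StringIO(raw)


def parse(stream):
    """Parse: turn CSV text into a list of dict records."""
    return list(csv.DictReader(stream))


def enrich(records):
    """Enrich: add a region looked up from the country code."""
    return [
        {**r, "region": REGION_BY_COUNTRY.get(r["country"], "UNKNOWN")}
        for r in records
    ]


def transform(records):
    """Transform: cast types and keep only the fields consumers need."""
    return [
        {
            "order_id": int(r["order_id"]),
            "region": r["region"],
            "amount_cents": round(float(r["amount"]) * 100),
        }
        for r in records
    ]


def run_pipeline(raw):
    """Chain the four stages in order."""
    return transform(enrich(parse(read(raw))))


if __name__ == "__main__":
    for row in run_pipeline(RAW_ORDERS):
        print(row)
```

Keeping each stage as a separate function means a stage can be swapped or tested on its own, which is the same idea a connector-based pipeline builder relies on.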

The platform lets you apply advanced business logic, automate routine tasks, and shape data into the right format for consumption. This reduces operational overhead and the risk of human error, freeing your teams to focus on analysis and innovation. Scalable and secure, Data Processing supports workloads ranging from routine data integration to next-generation analytics and AI. End-to-end monitoring and governance features help keep your data secure and compliant at every stage, so your business can gain actionable insights and stay ahead of the competition in a data-driven world.

Systems or users can schedule, trigger, or manually start jobs, which typically run in batch, micro-batch, or streaming mode.
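As a rough illustration of how start methods and run modes might be modelled, the sketch below uses plain Python. The `Trigger`, `Mode`, and `JobRun` names and the `micro_batches` helper are hypothetical and are not part of the product; the helper shows only the basic idea of micro-batching, which is splitting records into small fixed-size groups.

```python
from dataclasses import dataclass
from enum import Enum


class Trigger(Enum):
    """How a job run was started (hypothetical names)."""
    SCHEDULED = "scheduled"
    EVENT = "event"
    MANUAL = "manual"


class Mode(Enum):
    """How a job processes its input (hypothetical names)."""
    BATCH = "batch"
    MICRO_BATCH = "micro-batch"
    STREAMING = "streaming"


@dataclass(frozen=True)
class JobRun:
    job_name: str
    trigger: Trigger
    mode: Mode
    started_by: str  # "system" or a user name


def micro_batches(records, size):
    """Yield consecutive chunks of at most `size` records."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(records), size):
        yield records[start:start + size]


if __name__ == "__main__":
    run = JobRun("daily_orders", Trigger.SCHEDULED, Mode.MICRO_BATCH, "system")
    print(run.job_name, run.trigger.value, run.mode.value)
    for chunk in micro_batches([1, 2, 3, 4, 5], 2):
        print(chunk)
```

In this framing, batch mode corresponds to one chunk containing every record, while streaming would handle each record as it arrives.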